Drag queens and Artificial Intelligence: should computers decide what is ‘toxic’ on the internet?

Credit: Reproduction/VH1

By Alessandra Gomes, Dennys Antonialli and Thiago Dias Oliva

Internet social media platforms like Facebook, Twitter and YouTube have been under constant pressure to increasingly moderate content. Due to the sheer volume of third-party content shared on their services, these companies have started to develop artificial intelligence technologies to automate decision-making processes regarding content removals. These technologies typically rely on machine learning techniques and are specific to each type of content, such as images, videos, sounds and written text. Some of these AI technologies, developed to measure the “toxicity” of text-based content, make use of natural language processing (NLP) and sentiment assessment to detect “harmful” text.

LGBTQ speech: can “toxic” content play a positive role?

While these technologies may represent a turning point in the debate around hate speech and harmful content on the internet, recent research has shown that they are still far from being able to grasp context or to detect the intent or motivation of the speaker. As a result, they fail to recognize specific content as socially valuable, which may include LGBTQ speech aiming to reclaim words usually employed to harass members of the community. Additionally, there is a significant body of research in queer linguistics indicating that the use of “mock impoliteness” helps LGBTQ people cope with hostility [1] [2] [3] [4].

Some of these studies have focused specifically on the communication styles of drag queens. By playing with gender and identity roles, drag queens have always been important and active voices in the LGBTQ community. They typically use language that can be considered harsh or impolite to construct their forms of speech. When looking at their communication styles, scholars have identified that “a sharp tongue is a weapon honed through frequent use, and is a survival skill for those who function outside genteel circles […] [they have] been using perception and quick formulation to demand acceptance – or to annihilate any who would deny it. Such [in-group] play is quite literally, self-defense.” In that sense, “[…] utterances, which could potentially be evaluated as genuine impoliteness outside of the appropriate context, [but] are positively evaluated by in-group members who recognize the importance of ‘building a thick skin’ to face a hostile environment”.

How is AI being used to detect “toxic” content?

There are numerous AI tools currently being developed to understand, identify and target “toxic” or “harmful” content on the internet. Our research focuses on Perspective, an AI technology developed by Jigsaw (owned by Alphabet Inc., the conglomerate to which Google belongs), that measures the perceived levels of “toxicity” of text-based content. Perspective defines “toxic” as “a rude, disrespectful, or unreasonable comment that is likely to make you leave a discussion”. Accordingly, their model was trained by asking people to rate internet comments on a scale from “very toxic” to “very healthy”. The level of perceived toxicity indicates how likely a specific piece of content is to be considered “toxic”.

Because its algorithm is trained to learn which pieces of content are more likely to be considered “toxic”, Perspective may be a useful tool for making automated decisions about what should stay on internet platforms and what should be taken down. Such decisions are sensitive and political, as they have clear implications for free speech and human rights. Over the years, the debate around the role of internet platforms as content curators has become significantly more complex, especially when it comes to the use of AI.

How was our research carried out?

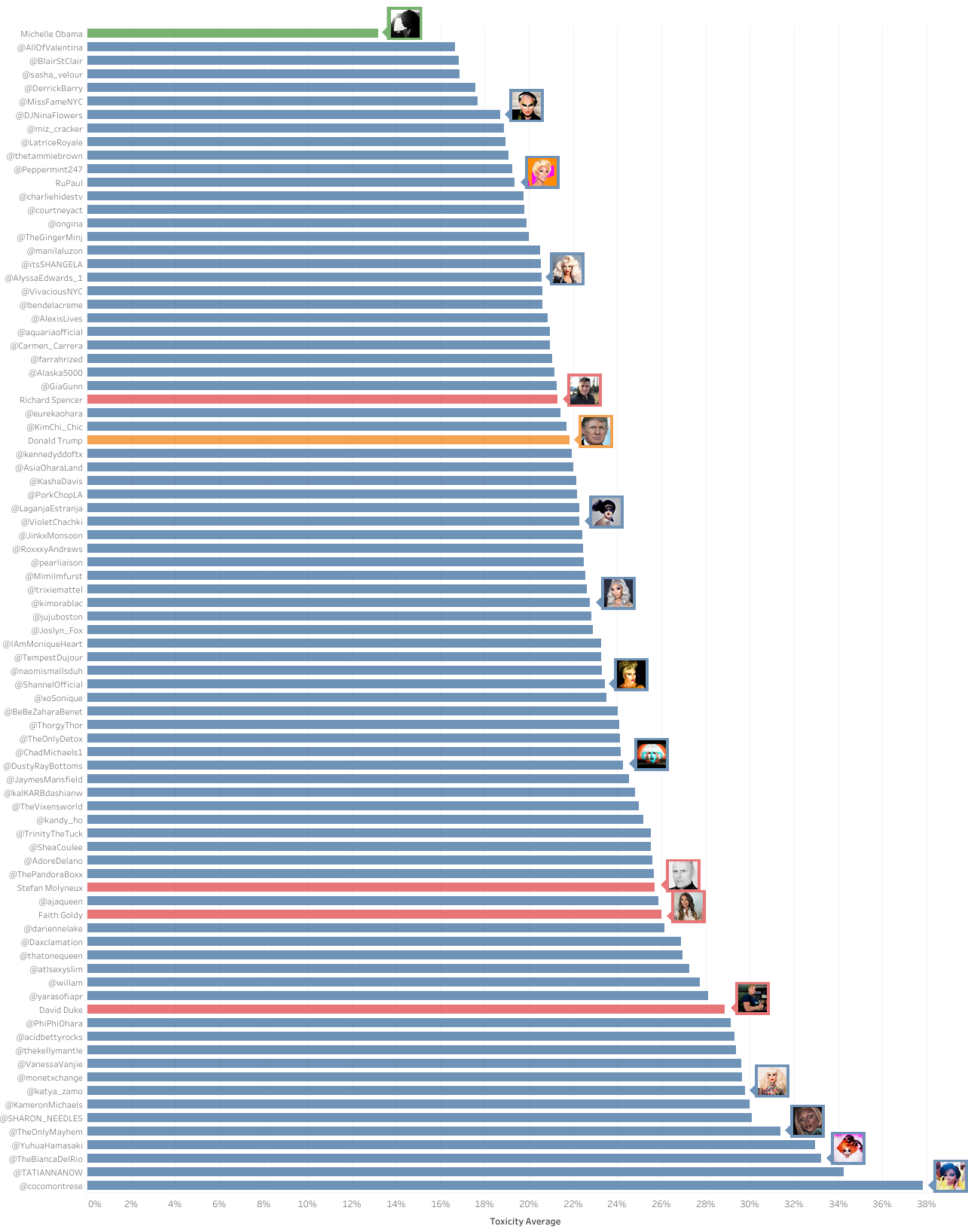

We used Perspective’s API to measure the perceived levels of toxicity of prominent drag queens in the United States and compared them with the perceived levels of toxicity of other prominent Twitter users in the US, especially far-right figures. The drag queens’ Twitter profiles were selected from former participants of the TV reality show “RuPaul’s Drag Race”, popular in the US and abroad, especially among the LGBTQ community.

After getting access to Twitter’s API, we collected tweets of all former participants of RuPaul’s Drag Race (seasons 1 to 10) who have verified accounts on Twitter and who post in English, amounting to 80 drag queen Twitter profiles.

We used Perspective’s production version 6 dealing with “toxicity”. We only used content posted in English, so tweets in other languages were excluded. We also collected tweets of prominent non-LGBTQ people (Michelle Obama, Donald Trump, David Duke, Richard Spencer, Stefan Molyneux and Faith Goldy). These Twitter accounts were chosen as control examples for less controversial or “healthy” speech (Michelle Obama) and for extremely controversial or “very toxic” speech (Donald Trump, David Duke, Richard Spencer, Stefan Molyneux and Faith Goldy). In total, we collected 116,988 tweets and analysed 114,204 (after exclusions).

A Python data-scraping script was developed to collect and analyze all relevant tweets in four steps. First, the tweets were collected and stored in a CSV dataset. Only three attributes were stored: the author’s name, the tweet’s time information and its content. A tweet identifier (an auto-incrementing ID number) was also created and stored. The second step involved cleaning and pre-processing the content, including the removal of URLs of images, as well as special characters (such as ’, “, ”, …), and the translation of emojis into text. Third, the tweets were submitted to Perspective’s API in order to obtain their toxicity probability. In the last step, the probabilities returned by the API were stored in a new CSV dataset with the tweets’ content and ID. With this new dataset it was possible to know how “toxic” each analyzed tweet is according to Perspective’s analysis.
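The cleaning and scoring steps described above can be sketched roughly as follows. This is a minimal illustration, not the script used in the study: the function names are hypothetical, the emoji translation via Unicode character names is an assumed stand-in for whatever mapping was actually used, the URL pattern removes every link rather than only image links, and the endpoint and JSON shape reflect Perspective’s public Comment Analyzer API as we understand it. The `api_key` argument is a placeholder for a key you would need to obtain yourself.

```python
import json
import re
import unicodedata
import urllib.request

# Public endpoint of Perspective's Comment Analyzer API (assumed, see lead-in).
PERSPECTIVE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"

URL_PATTERN = re.compile(r"https?://\S+")
# Special characters named in the text as removed during pre-processing.
SPECIAL_CHARS = "’“”…"


def emoji_to_text(char):
    """Replace a symbol character with its lowercase Unicode name.

    Rough stand-in for an emoji-to-text mapping: it treats every character
    in the Unicode category "So" (Symbol, other) as an emoji.
    """
    if unicodedata.category(char) == "So":
        return " " + unicodedata.name(char, "").lower() + " "
    return char


def clean_tweet(text):
    """Drop URLs and special characters, then translate emojis into text."""
    text = URL_PATTERN.sub("", text)
    text = text.translate({ord(c): None for c in SPECIAL_CHARS})
    text = "".join(emoji_to_text(c) for c in text)
    return " ".join(text.split())  # collapse repeated whitespace


def build_request(text):
    """Build the JSON body asking only for the TOXICITY attribute."""
    return {
        "comment": {"text": text},
        "languages": ["en"],
        "requestedAttributes": {"TOXICITY": {}},
    }


def parse_toxicity(response):
    """Extract the summary toxicity probability (0.0 to 1.0) from a reply."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


def score_tweet(text, api_key):
    """Send one cleaned tweet to the API and return its toxicity score."""
    request = urllib.request.Request(
        f"{PERSPECTIVE_URL}?key={api_key}",
        data=json.dumps(build_request(clean_tweet(text))).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as reply:
        return parse_toxicity(json.load(reply))
```

Keeping the request builder and the response parser separate from the network call makes it possible to check the cleaning logic on sample text without an API key.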

What were the main results?

In the chart below, the drag queens are in blue, white supremacists in red, Michelle Obama in green and Donald Trump in orange.

The results indicate that a significant number of drag queen Twitter accounts were considered to have higher perceived levels of toxicity than those of Donald Trump and white supremacists. The average toxicity levels of drag queens’ accounts ranged from 16.68% to 37.81%, while white supremacists’ averages ranged from 21.30% to 28.87%; Trump’s lies at 21.84%.
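A per-account average of this kind could be computed along the following lines. The sketch assumes the averages are simple arithmetic means of the per-tweet scores, which the text does not state explicitly; the function name, account labels and scores are invented for illustration and are not data from the study.

```python
from collections import defaultdict
from statistics import mean


def average_toxicity_by_author(rows):
    """Group (author, toxicity) pairs by author and average the scores."""
    scores = defaultdict(list)
    for author, toxicity in rows:
        scores[author].append(toxicity)
    return {author: mean(values) for author, values in scores.items()}


# Made-up rows standing in for the (author, score) columns of the CSV dataset.
rows = [("account_a", 0.10), ("account_a", 0.30), ("account_b", 0.50)]
averages = average_toxicity_by_author(rows)
```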

We have also run tests measuring the levels of toxicity of words commonly found in the tweets from drag queens. Most of these words had significantly high levels of toxicity: BITCH – 98.18%; FAG – 91.94%; SISSY – 83.20%; GAY – 76.10%; LESBIAN – 60.79%; QUEER – 51.03%; TRANSVESTITE – 44.48%.

That means that regardless of context, words such as “gay”, “lesbian” and “queer”, which should be neutral, are already taken as significantly “toxic”, which points to important biases in Perspective’s tool. In addition, even though other words such as “fag”, “sissy” and “bitch” might be commonly perceived as “toxic”, the literature on queer linguistics indicates how their use by the members of the LGBTQ community plays an important and positive role.

As the use of “impolite utterances” or “mock impoliteness” has been identified as a form of interaction that serves to prepare members of the LGBTQ community to cope with hostility, automated analyses that disregard this pro-social function have significant repercussions on the community’s ability to reclaim those words, and they reinforce harmful biases.

One possible reason behind such distortions and biases is the challenge for AI technologies to analyse context. Perspective’s tool seems to rely much more on the “general” perceived toxicity of given words or phrases than on assessing the toxicity of ideas or ideology, which can be very contextual. These discrepancies probably also stem from biases in the training data, which has been a topic of intense debate in computer science communities dealing with AI technologies, particularly in natural language processing (NLP).

How can this concretely impact LGBTQ speech?

Just recently, Jigsaw launched Tune, an experimental browser plugin now available for Chrome, which uses Perspective to let users turn a dial up or down to set the ‘volume’ of online content. At launch, Tune works across different social media platforms including Facebook, Twitter, YouTube and Reddit. Users are able to turn the knob up to see everything, or turn it down all the way to a “hide all mode” to replace toxic comments with small colored dots. Tune markets itself around the idea that “abuse and harassment take attention away from online discussions. Tune uses Perspective to help you focus on what matters.”

In “hide all mode”, this is what the Twitter profile of drag queen Yuhua Hamasaki looks like:

The purple dots are tweets that have been hidden. According to our research, taken individually, 3,925 tweets from drag queens were considered to have perceived levels of toxicity that are higher than 80% (around 3.7% of the total amount of analyzed tweets), which means they all would have been hidden from users of Tune.
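The count above amounts to a threshold test on each tweet’s score. A minimal sketch, assuming scores are expressed as probabilities between 0 and 1 and using the 80% cut-off mentioned in the text; the function name and the sample scores are hypothetical and do not come from the study’s dataset.

```python
def hidden_share(scores, threshold=0.80):
    """Return how many scores exceed the threshold and their share of the total."""
    if not scores:
        return 0, 0.0
    hidden = [score for score in scores if score > threshold]
    return len(hidden), len(hidden) / len(scores)


# Invented scores: only values strictly above 0.80 count as hidden.
sample_scores = [0.05, 0.92, 0.40, 0.81, 0.80]
count, share = hidden_share(sample_scores)
print(f"{count} of {len(sample_scores)} tweets hidden ({share:.1%})")
```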

Our qualitative analysis of individual tweets also illustrates how these biases have significant implications for LGBTQ speech. If AI tools with such embedded biases are implemented to remove, police, censor or moderate content on the internet, that may have profound impacts on the ability of members of the LGBTQ community to speak up and reclaim the use of “toxic” or “harmful” words for legitimate purposes, as we can see below.

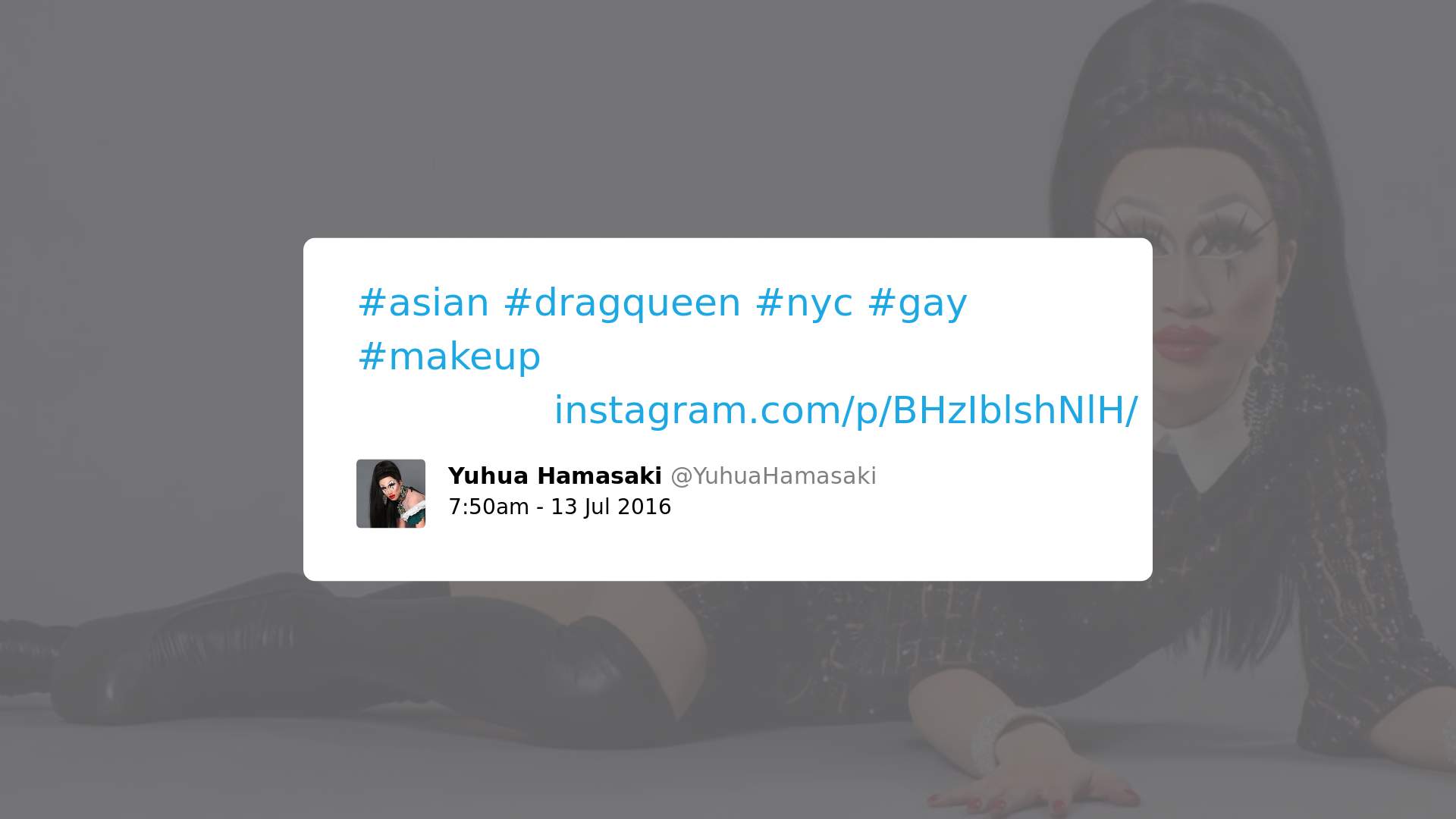

1. Cases in which the content should be considered neutral, but was likely considered toxic by Perspective because of a given word, like “gay” or “lesbian”:

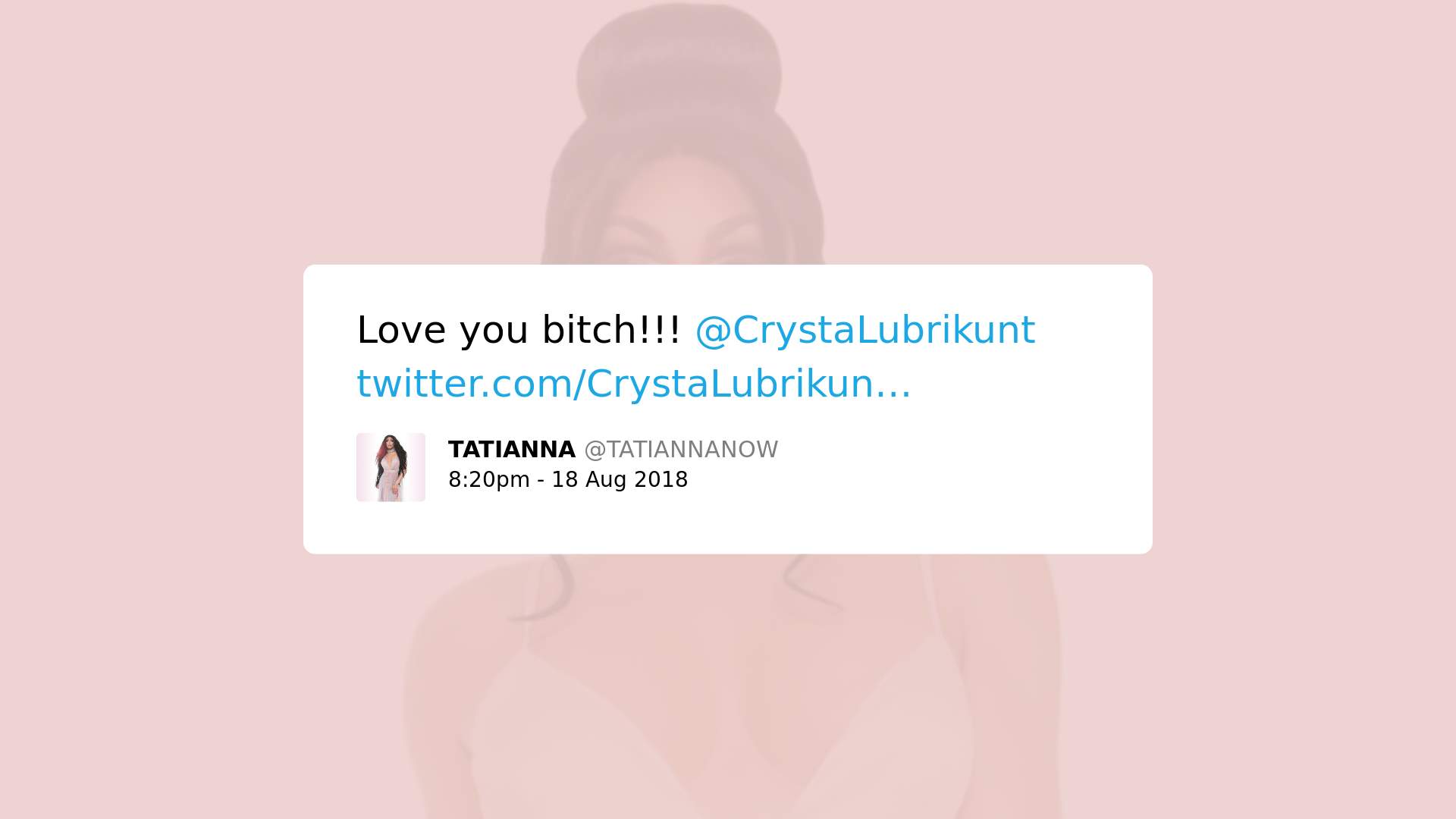

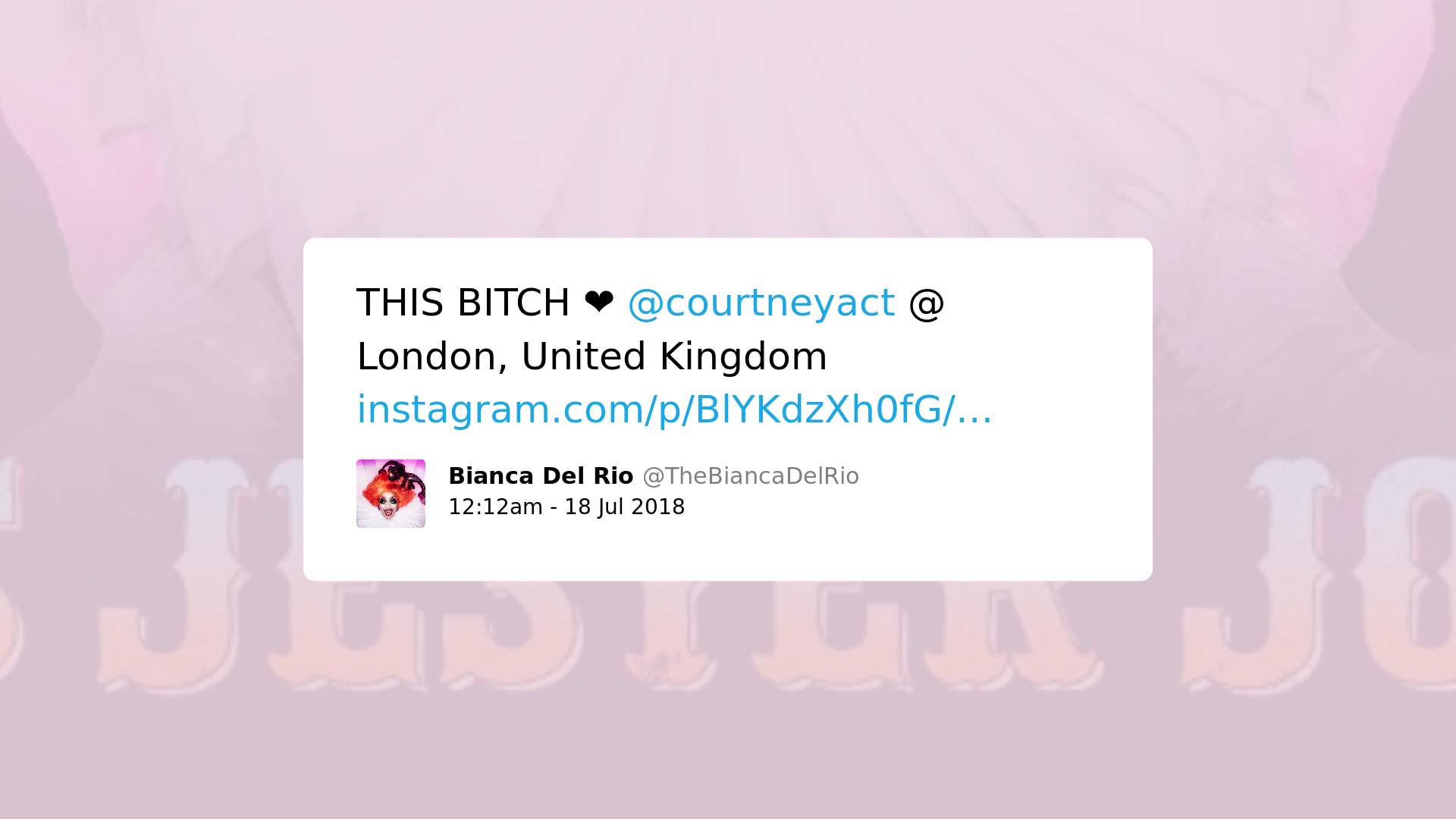

2. Cases in which a word used could be considered offensive, but could also convey a different meaning in LGBTQ speech (even a positive one):

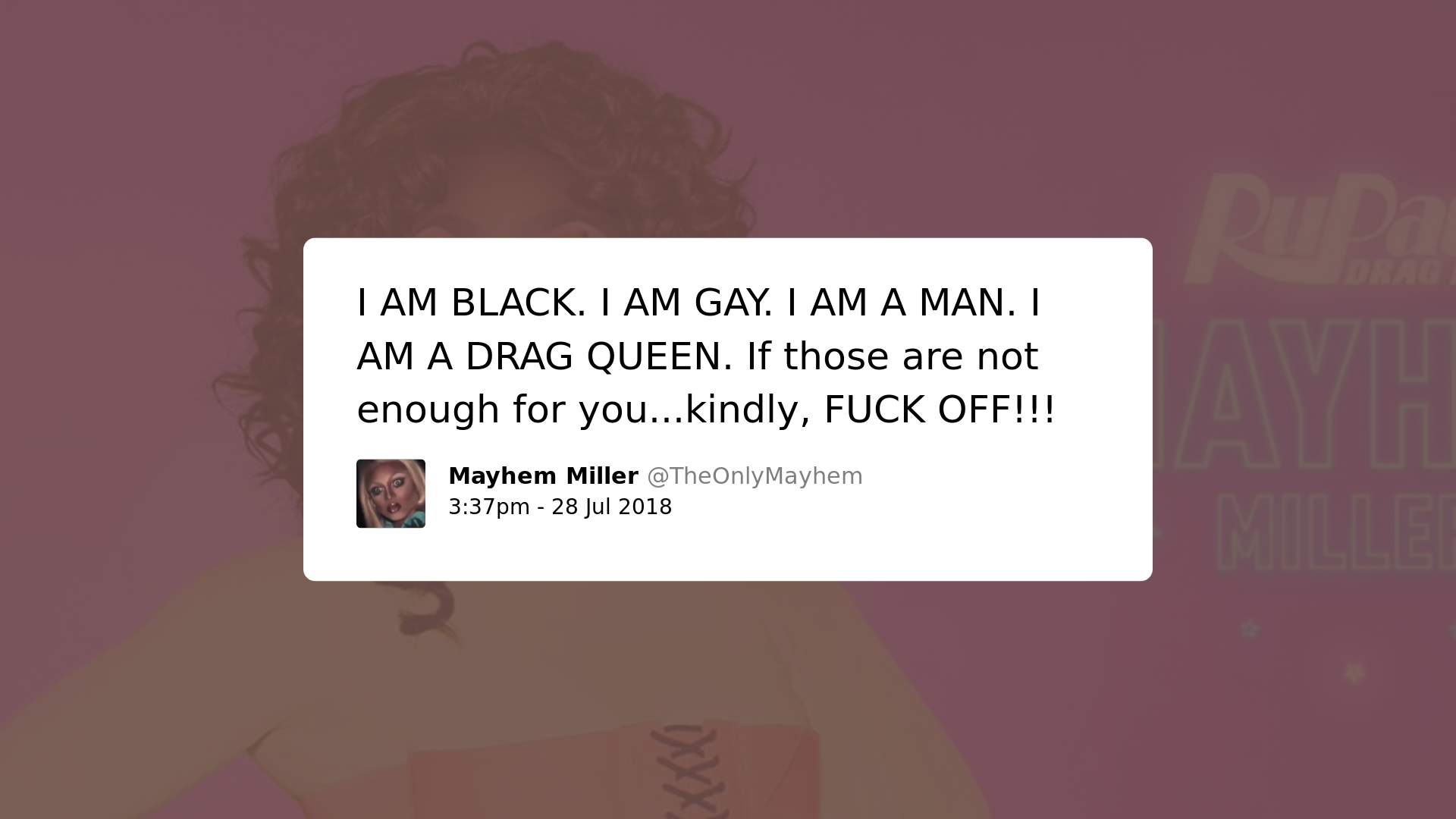

3. Cases in which words or expressions commonly used to attack LGBTQ people are reclaimed by LGBTQ people:

4. Cases in which drag queens may actually use “toxic” language, but with a social and political value, oftentimes to denounce or speak up against homophobia and other forms of discrimination, such as sexism and racism:

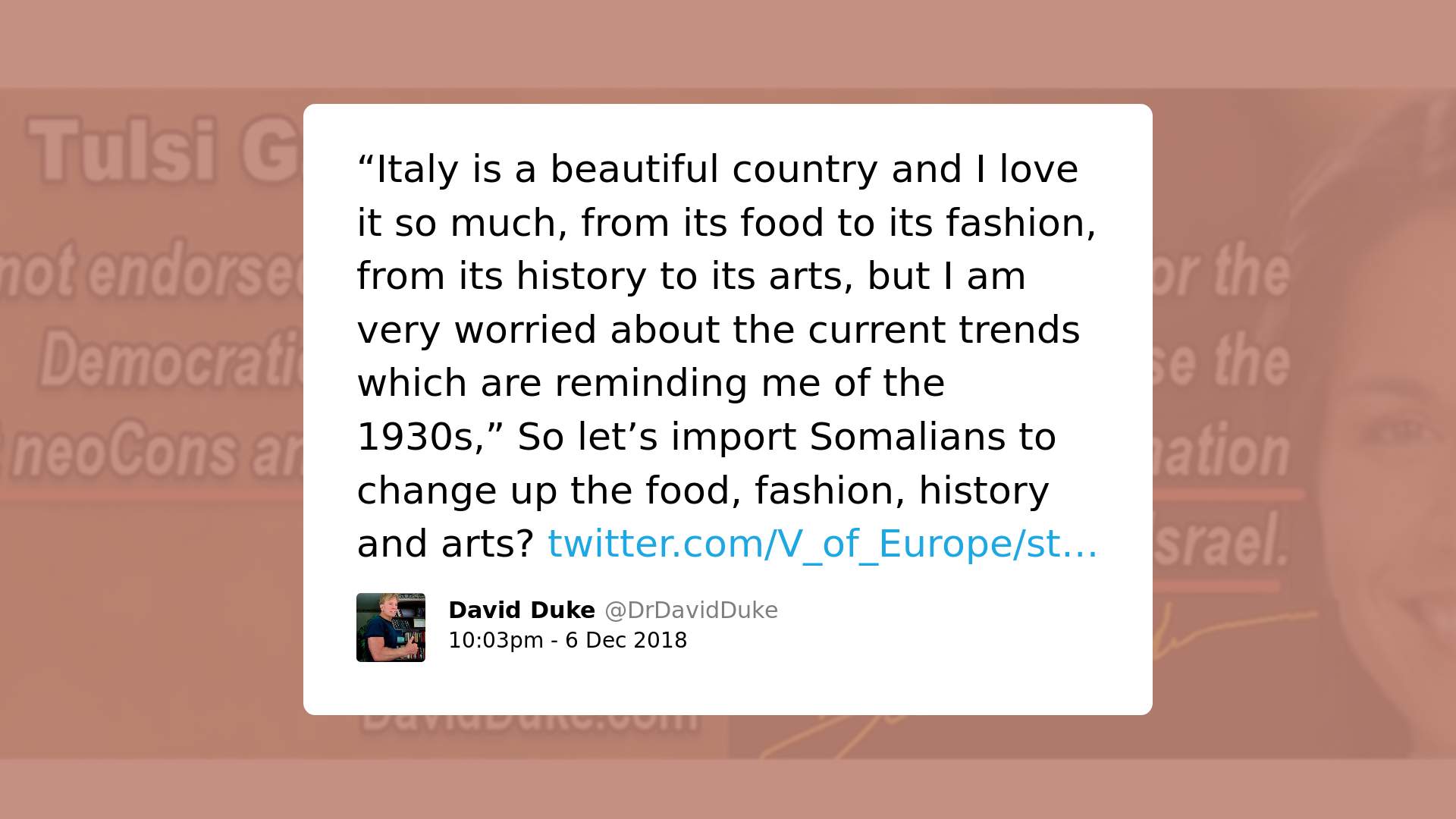

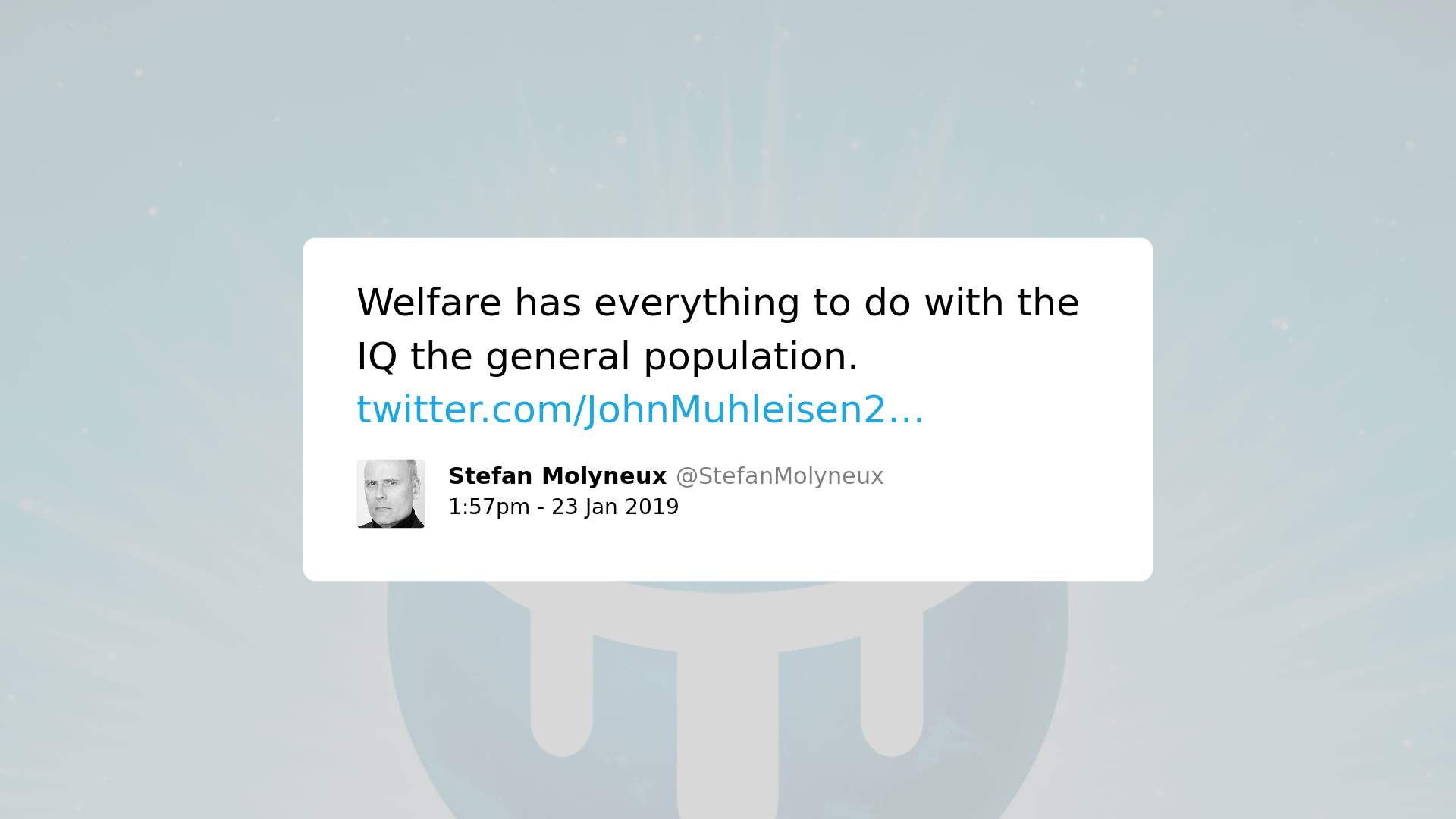

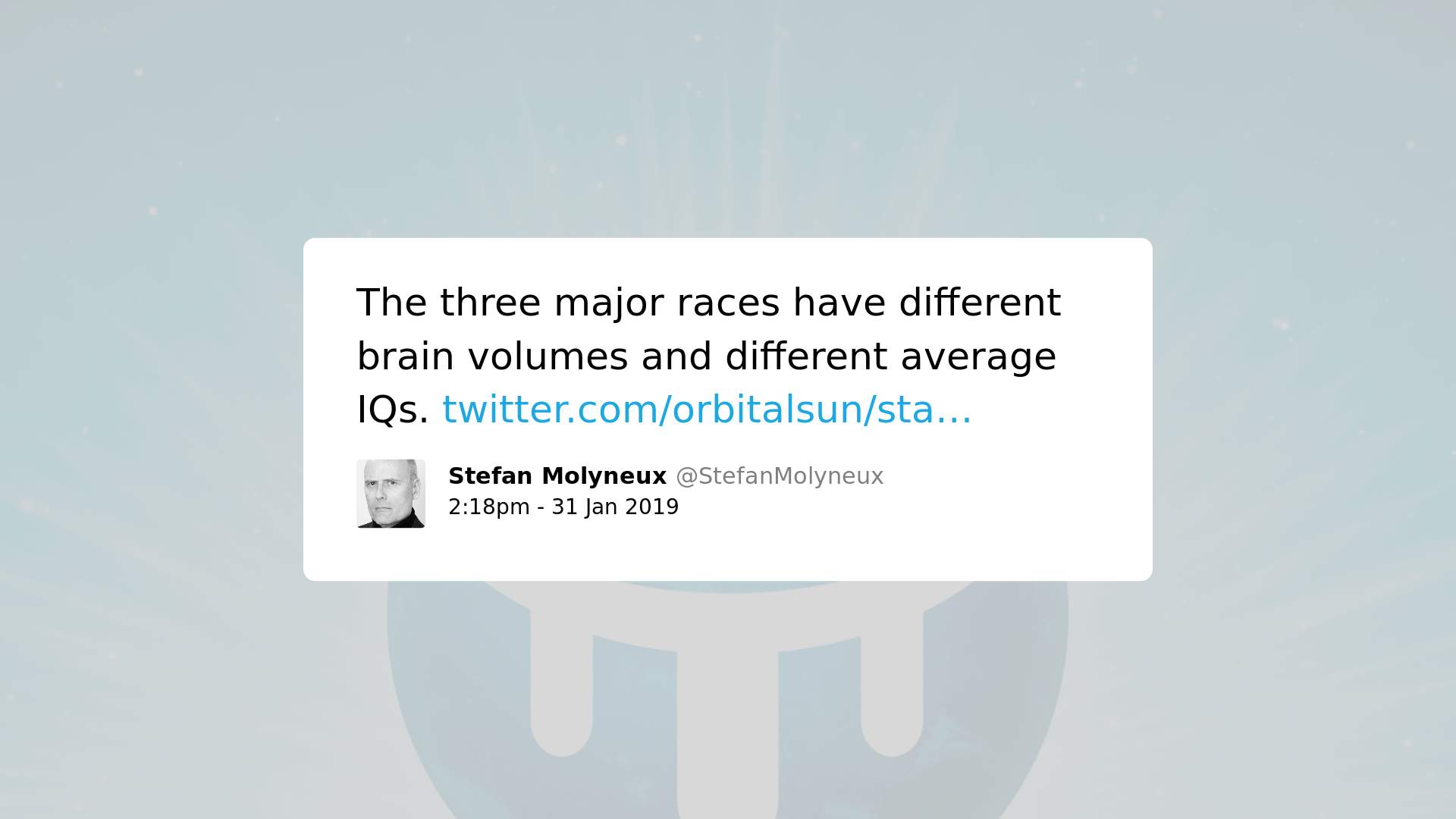

Here are examples of tweets from white supremacists promoting content and ideas that could be considered as ‘hate speech’ that had low levels of toxicity:

What does that mean for the future of speech of marginalized groups?

Given the specific communicational practices of the LGBTQ community, particularly of drag queens, the use of Perspective – as well as other similar technologies – to police content on internet platforms could hinder the exercise of free speech by members of the community. Since AI technologies such as Perspective do not seem to be fully able to grasp social context and recognize specific content as socially or politically valuable – as is the case with the LGBTQ community’s “insult rituals” – it is likely that automated decisions based on them would suppress legitimate content from LGBTQ people. This is particularly striking given that one of the main reasons behind the development of these tools is precisely to deal with hate speech targeting marginalized groups such as LGBTQ people. If these tools might prevent LGBTQ people from expressing themselves and speaking up against what they themselves consider to be toxic, harmful or hateful, they are probably not a good solution.

In the long term, there also needs to be a more profound discussion about how those tools might impact, change and shape the way we all communicate. If an AI tool considers the word “bitch” to be “inappropriate” or “toxic”, does that mean we should stop using it? If computers decide what is “toxic” on the internet, what does that mean to the future of speech and to the way we decide to express ourselves, on the internet and outside of it?